The mystery of mutable subresources in Signed Exchanges

What they are, how they break caching, and how to fix them (part 4 of 8)

In the previous part, you learned CORS errors mean (sub)resources are missing from the Google Signed Exchanges (SXG) cache. This and later posts will explain how to deal with that.

I suggest you also read the first two parts to fully understand what is happening. They contain fundamentals on how to adjust your website to be SXG-compatible.

This and later posts summarize my research on issues related to the prefetching of SXG subresources. As I write, there is no official or unofficial documentation on most of the challenges you may encounter. My articles aim to fill that gap.

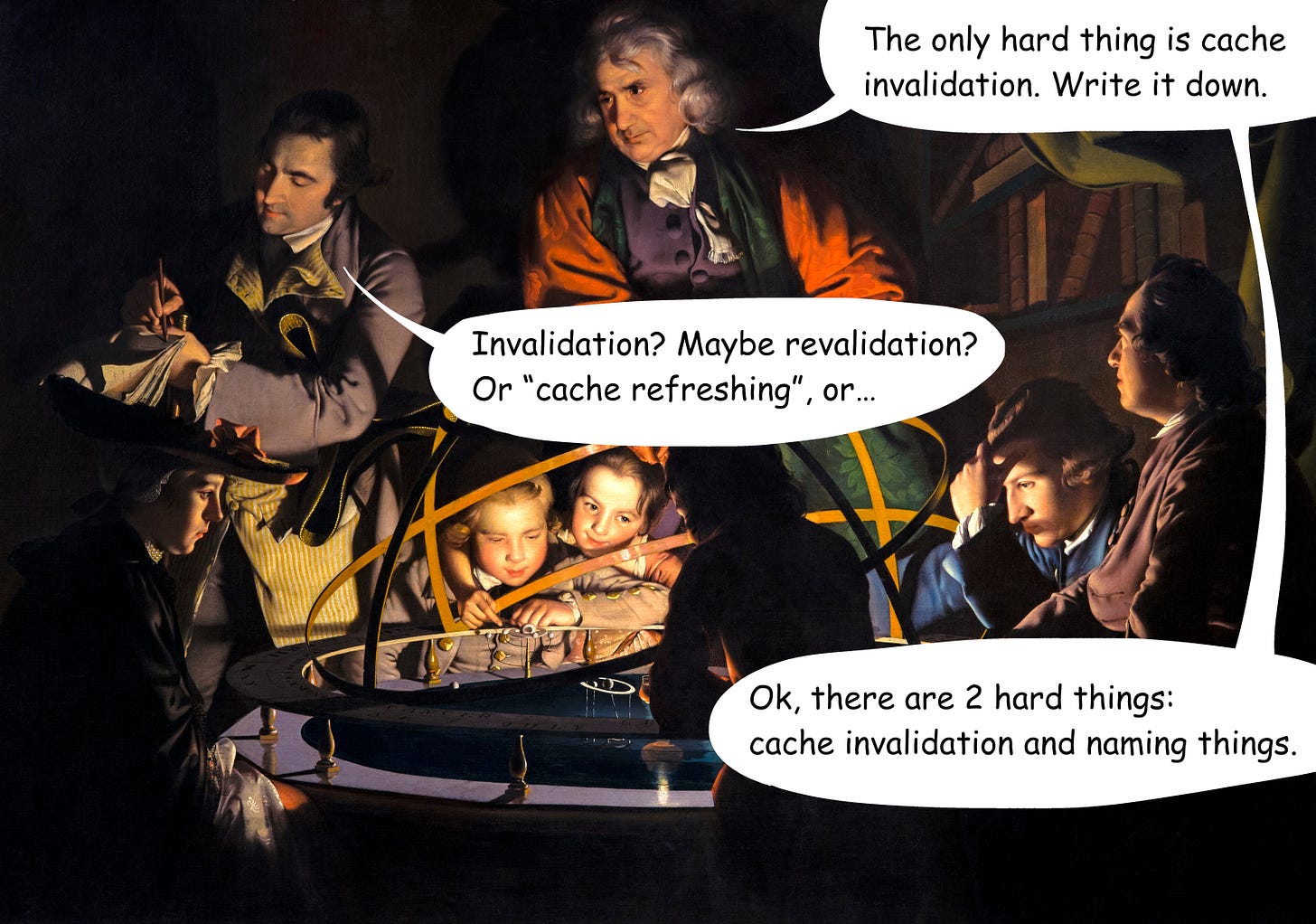

Have a way to invalidate all the caches

When you work on SXG subresource prefetching issues, you want to be sure you can observe the impact of your changes. Caching can make that non-trivial.

Many caches sit between your app and you. Here is a minimal set of caches:

Cloudflare caches [1]

Google SXG cache

Browser cache

Depending on your configuration, you may also use app cache, framework cache, web server cache, additional layers at Cloudflare, and potentially others.

After implementing the changes, you could clear all of the involved caches, but that’s a lot of work and sometimes it’s even impossible.

It’s much easier to make sure the URLs you are about to test have not been accessed before and are therefore guaranteed to be absent from the caches. After making an SXG-specific change to your app or configuration, make sure that:

You are testing a different subpage of your website. In my case, I can access my app under different URLs for each Polish city & vendor category combination. It gives an almost unlimited set of URLs I can use for testing.

URLs of your assets change. This is important during the reconfiguration of HTTP headers and other subresource-specific modifications explained later. Every framework has its own ways of achieving that.

As my website combines Ruby on Rails and Next.js, I will demonstrate how to invalidate cache in those frameworks. Different frameworks likely have their own ways of achieving that, but some concepts may remain the same.

Invalidate the cache in Rails

Rails makes it easy. As long as you use asset helper methods (image_tag, stylesheet_link_tag, etc) to reference your assets, just increment the version of the assets in config/initializers/assets.rb file:

Rails.application.config.assets.version = '1'

Invalidate the cache in Next.js

This framework uses two forms of referencing assets:

Direct: the developer specifies the path to the asset in the CSS, HTML tag, or React component. In the context of SXG, it is used mostly for images and fonts.

Autogenerated: the framework compiles JavaScript and CSS into chunks and places them inside the HTML head of the document (and our custom Cloudflare worker puts them in the Link header). The developer has limited control over the URLs.

Direct paths

In the case of directly referenced assets, the simple solution is to move all your assets into a subdirectory named after a version and add a version prefix while referencing URLs.

So instead of using:

<img src="/icons/icon.svg">

You will use:

<img src="/1/icons/icon.svg">

When invalidating the cache, you rename the directory to “2” and update all references using global search-and-replace in your code editor. It’s a good idea to create a function returning the versioned asset URL and store the current version in a global ENV variable:

function assetPath(path) {
  return `/${process.env.ASSETS_VERSION}/${path.replace(/^\//, '')}`;
}

Make sure to include the current version in your .env file. This way you have a single place where you can update the version (along with renaming the assets version directory):

ASSETS_VERSION=1

Referencing the assets would look like this:

<img src={assetPath("/icons/icon.svg")}>

Autogenerated paths

Next.js has a built-in assetPrefix configuration parameter that makes it possible to embed version numbers into the URLs. You'll still need to move the assets to the prefixed location during deployment. The specific instructions will vary depending on your deployment method, therefore I won’t provide them here and describe a more generic solution instead.

For autogenerated URLs, the Next.js config has to be adjusted to include the version number stored in the ASSETS_VERSION configuration variable. Extend the configuration object in your next.config.js file using the following template:

const NextMiniCssExtractPlugin = require('next/dist/compiled/mini-css-extract-plugin');

function addVersionToAssets(config) {
  const ver = process.env.ASSETS_VERSION;
  const addVer = (text) => text.replace('[name]', `[name]-${ver}`);

  // Include version in script URLs. For more context see:
  // https://blog.pawelpokrywka.com/p/fixing-sxg-prefetching-errors-caused-by-mutable-subresources
  const filename = config.output.filename;
  const chunkFilename = config.output.chunkFilename;
  if (filename && chunkFilename && filename.startsWith('static')) {
    config.output.filename = addVer(filename);
    config.output.chunkFilename = addVer(chunkFilename);
  }

  // CSS URLs are handled by a plugin. It needs to be reconstructed
  // with a template containing the version prefix.
  const index = config.plugins.findIndex(
    (plugin) => plugin.constructor.name === 'NextMiniCssExtractPlugin'
  );
  const template = `static/css/${ver}-[contenthash].css`;
  if (index !== -1) {
    config.plugins[index] = new NextMiniCssExtractPlugin({
      filename: template, chunkFilename: template
    });
  }
}

const nextConfig = {
  // Your current config options
  webpack: (config, options) => {
    if (!options.dev) addVersionToAssets(config);
    // Your current webpack customizations go here
    return config;
  }
}

module.exports = nextConfig

After building the app, you will notice that your JS chunks and CSS files have embedded version numbers. To invalidate the cache, increment the version stored in ASSETS_VERSION in your .env file and rebuild.

One of the chunks generated by Next.js, the polyfills chunk, is:

loaded with a nomodule attribute, which ensures that only legacy browsers without support for ES modules will download it,

treated specially by Next.js making it non-trivial to include a version in the URL.

Rather than invalidating this particular chunk, it's more efficient to avoid preloading it altogether. Prefetching polyfills for modern browsers is wasteful since they don't need them. The worker that adds the Link header (described in the second part of the series) already ignores <script> tags with the nomodule attribute.

Given all of the above, you don’t need to worry about invalidating the polyfills chunk.

Subresources stored externally

I believe this should be rare, so I won't go into details here. Sometimes, you may need to change the HTTP headers of your subresources hosted externally and proxied using a Cloudflare worker.

In this case, the URLs of subresources should change too.

Your app should include version numbers in URLs of external subresources using an approach similar to the assetPath function I described earlier.

On the worker side, you can implement it by including the version number in the URL map.

const MAP = {
  'https://www.your-domain.com/cdn-proxy/PUT_THE_VERSION_HERE':
    'https://cdn.com/your-bucket/',
  // You may add more mappings such as:
  // 'external-sxg-prefetchable-url': 'cdn-url'
}

// Rest of the worker logic

The production-grade solution would use a regexp accepting any version, so the worker code doesn’t need to be updated every time the version changes. I leave it as an exercise for the reader.
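One possible approach to that exercise is sketched below. It is only a sketch under assumptions: it assumes exposed URLs have the form /cdn-proxy/<numeric version>/<path>, it reuses the placeholder domain and bucket from the MAP example, and toCdnUrl is a hypothetical helper name, not part of any Cloudflare API.

```javascript
// Placeholder target taken from the MAP example; replace with your CDN base URL.
const CDN_BASE = 'https://cdn.com/your-bucket/';

// Assumption: exposed URLs look like /cdn-proxy/<numeric version>/<path>.
const VERSIONED_PROXY_URL =
  /^https:\/\/www\.your-domain\.com\/cdn-proxy\/\d+\/(.+)$/;

// Returns the CDN URL for a versioned proxy URL, or null if it doesn't match.
// The version segment is accepted and then dropped, so any version maps
// to the same CDN object.
function toCdnUrl(url) {
  const match = VERSIONED_PROXY_URL.exec(url);
  return match ? CDN_BASE + match[1] : null;
}
```

With this in place, bumping the version in the app changes the exposed URLs without requiring a worker redeploy. The worker would call toCdnUrl(request.url) and pass through any request for which it returns null.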

In the case of a proxying worker, Cloudflare uses the original, CDN-provided URLs as cache keys, not the URLs the worker exposes to your users. Even if you modify the exposed URLs, the original URLs won’t change, meaning stale cache entries will be used. Remember to purge the Cloudflare cache to avoid that, unless you modify your subresources in the worker only, without changing them on the CDN.

Google SXG cache ingestion process

Before we dive deeply into the causes of missing entries in the SXG cache, it’s critical to understand how Google ingests your pages and how it cooperates with Cloudflare.

Reverse engineering the process

I modeled this process using reverse engineering. I did it out of necessity, to help me understand the issues I was experiencing. I performed requests, observed the responses, and then analyzed logs from the server and Cloudflare.

As I write this post, the resulting process isn’t documented anywhere else.

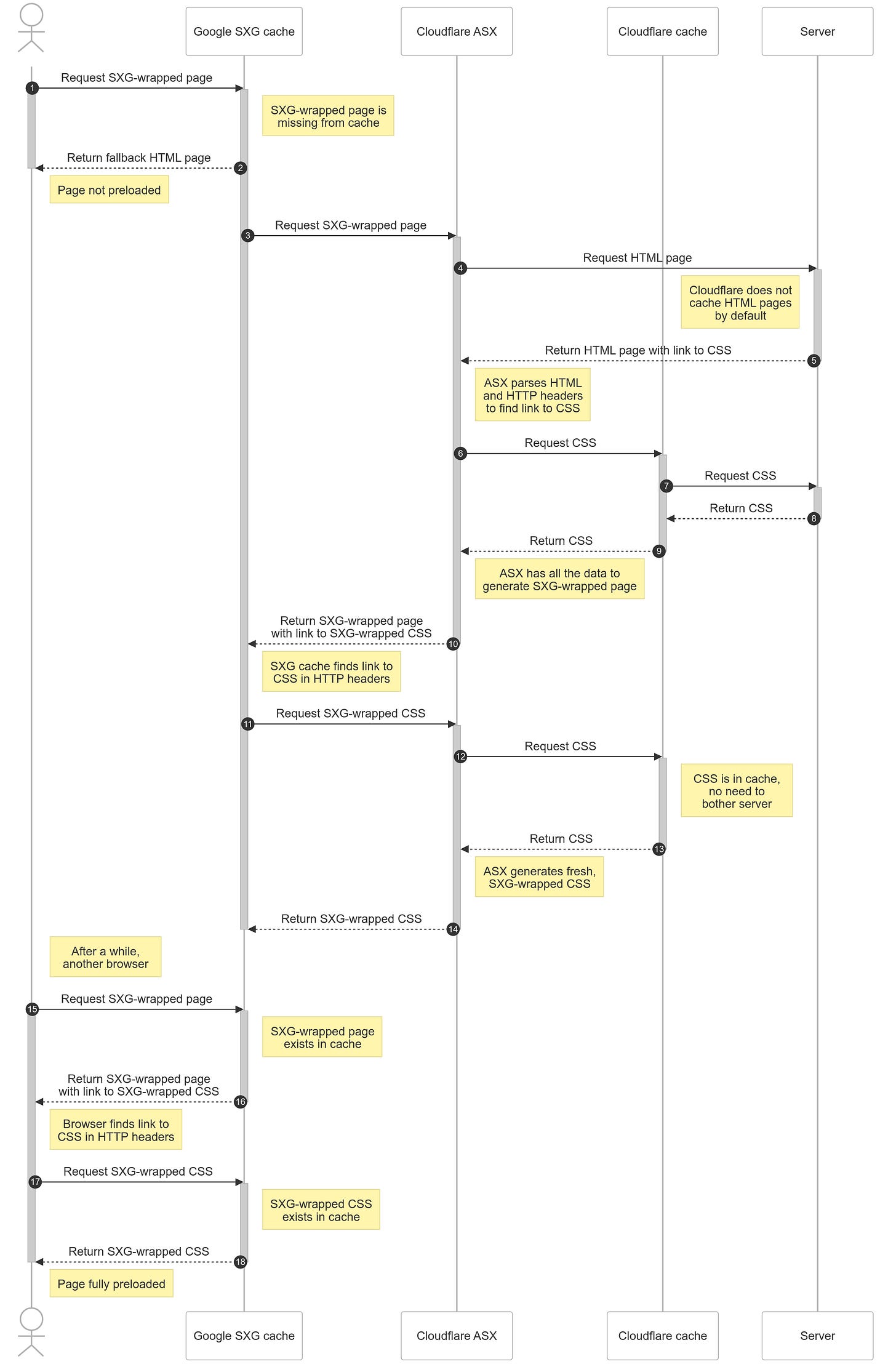

After the user lands on the Google search results page, the page tries to prefetch a result the user is predicted to click. The following diagram illustrates what happens. For simplicity, it assumes the page depends on only one subresource (CSS file):

When the first user requests the page, the prefetching initially fails because the page has not yet been cached. However, this request triggers the cache population process. Consequently, the second user (starting at step 15) benefits from the now-prefetched page, experiencing faster load times.

You may want to open the diagram in a new tab to avoid repeated scrolling—it will be easier to switch between tabs as I reference it throughout this discussion.

Understanding the Google SXG cache population process (steps 3-14) is crucial in debugging potential errors. Observe the clean separation of responsibilities:

Cloudflare Automated Signed Exchanges (ASX) is a layer wrapping HTTP responses into SXG and doing nothing else.

Cloudflare cache knows nothing about SXG, and neither does the server.

Request differences

During the cache ingestion, subresources are requested by different systems for different purposes:

Cloudflare ASX for computing integrity hashes that will be included in the SXG-wrapped page,

Google SXG cache for making subresources available to browsers for prefetching.

Let's compare the requests generated by these systems to better understand potential issues.

Here are interesting headers from an example request made by Cloudflare ASX:

Accept: text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8

Accept-Encoding: gzip, br

Cf-Connecting-Ip: 2a06:98c0:3600::103

Observations:

The request lacks a User-Agent header.

The Accept header doesn’t mention Signed Exchanges at all.

The IP address belongs to Cloudflare.

Let's examine some notable headers from a sample Google SXG cache request:

Accept: */*;q=0.8,application/signed-exchange;v=b3

Cf-Connecting-Ip: 66.249.72.7

User-Agent: Mozilla/5.0 (Linux; Android 6.0.1; Nexus 5X Build/MMB29P) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/127.0.6533.119 Mobile Safari/537.36 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)

Observations:

The request lacks an Accept-Encoding header.

The Accept header indicates that Google prioritizes SXG over other formats.

The IP address belongs to Google.

Watching the SXG cache ingestion live

I created a tool to demonstrate the ingestion process. It allows you to trigger the SXG cache population and observe requests made by Google and ASX. The tool uses the presence or absence of the User-Agent header to determine if the request is performed by Google or Cloudflare respectively.
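The User-Agent heuristic can be sketched as follows. This is not the tool's actual source, only an illustration of the rule described above. The function name and the returned labels are made up for this example, and the headers are assumed to arrive as a plain object.

```javascript
// Classify a request by its headers, following the heuristic described above:
// Google's requests carry a User-Agent header, Cloudflare ASX requests don't.
// `headers` is a plain object; header names are matched case-insensitively.
function classifyRequester(headers) {
  const names = Object.keys(headers).map((name) => name.toLowerCase());
  return names.includes('user-agent') ? 'google-sxg-cache' : 'cloudflare-asx';
}
```

Keep in mind that regular browsers send a User-Agent header too, so this rule only distinguishes the two systems on an endpoint that nobody else requests.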

Using the tool you may notice that trying to prefetch a page already present in the Google SXG cache (step 15) often has a side-effect of triggering the cache population process to refresh the entry. I intentionally didn’t include it on the diagram to keep it readable.

Keep in mind that the tool bypasses Cloudflare's cache, which is both a critical component in real-world applications and a significant indirect source of SXG-related errors.

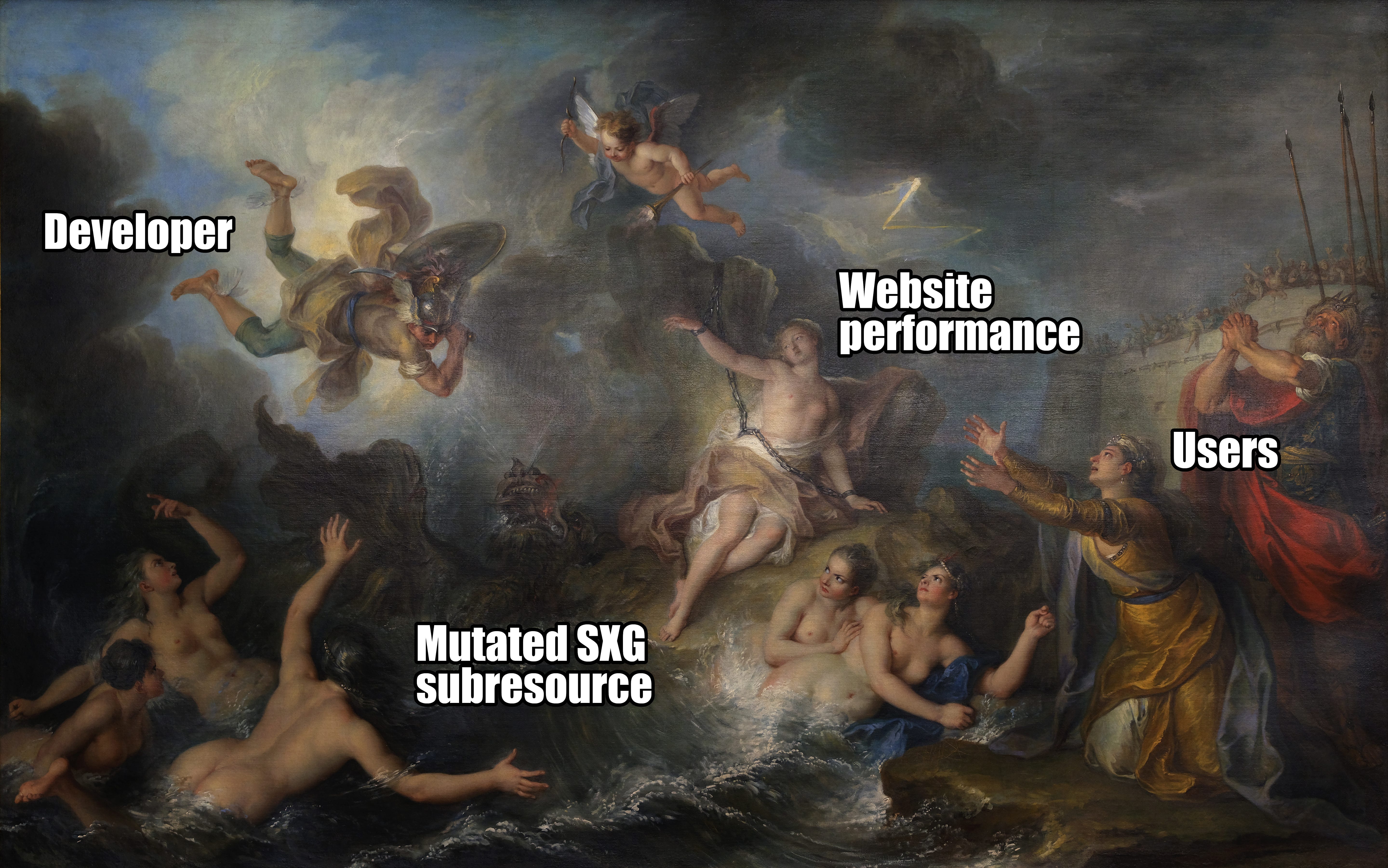

Mutable subresources

I define a mutable subresource as one that changes over time but remains accessible under the same URL. In contrast, an immutable subresource doesn’t change.

From my experience, most of the hard-to-debug SXG issues are caused by mutable subresources.

Why does a mutable subresource break things?

Let’s assume we have a page with a subresource that changes on each request. It may be the Web 1.0 visit counter - each time it is requested, it returns an incremented number as an image. The server keeps the current number, increases it on every request, generates a GIF, and returns it.

We need to disable the Cloudflare cache. Otherwise, it would cache the counter, effectively stopping it.

Please remember, this is just an example prepared to illustrate the point. It doesn’t make much sense to implement it in real-world use cases.

What will happen when Google tries to fetch this page and put it into its SXG cache?

According to the diagram above:

It requests the page from ASX (step 3).

ASX requests the page from the server and the counter image subresource (steps 4 and 6). The server generates an image presenting number 1.

ASX generates an SXG-wrapped page, includes the subresource URL along with its integrity hash, and returns it to Google (step 10).

Google parses the SXG-wrapped page, finds it depends on the subresource, and downloads it from ASX (step 11).

As Cloudflare cache is disabled, ASX downloads it directly from the server (step 12). This time, the server generates an image with the number 2.

ASX wraps the image into the SXG package and returns it to Google (step 13).

Google compares the integrity hash of the downloaded subresource with the integrity hash stored in the SXG-wrapped page. As those differ, Google rejects the subresource as invalid and doesn’t store it in the cache.

The integrity hashes were different because those were different images. The first represented number 1, the second number 2.
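The mismatch can be reproduced in a few lines. The sketch below is a simplification: the real SXG integrity computation is more involved, so a SHA-256 over the serialized headers and body stands in for it here, and the counter "image" is just a string. All names are illustrative.

```javascript
const { createHash } = require('node:crypto');

// Stand-in for the real SXG integrity computation, which is more involved.
// Here a subresource is fingerprinted by hashing its headers and body together.
function fingerprint({ headers, body }) {
  return createHash('sha256')
    .update(JSON.stringify(headers))
    .update(body)
    .digest('base64');
}

// The visit counter: every request returns different content under the same URL.
let visits = 0;
function serveCounter() {
  visits += 1;
  return { headers: { 'content-type': 'image/gif' }, body: `counter image ${visits}` };
}

// Steps 4-10: ASX fetches the subresource and embeds its hash in the page.
const hashInPage = fingerprint(serveCounter());

// Steps 11-13: Google fetches the subresource again, with the cache disabled.
const hashSeenByGoogle = fingerprint(serveCounter());

// Different content means different hashes, so the subresource is rejected.
const accepted = hashInPage === hashSeenByGoogle;
console.log(accepted); // false
```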

Still, the SXG-wrapped page will be stored in the Google SXG cache. However, it will depend on a subresource that can’t be prefetched, because it’s missing from the cache.

On Google search results, when prefetching the page, the browser will be instructed to prefetch the subresource located under a URL computed from the subresource integrity hash included in the SXG-wrapped page. As no subresource is stored under that URL, the fallback HTML page will be returned instead, causing a CORS error in the browser.

But my subresources are immutable!

If you follow best practices in web development, you probably try to keep your assets immutable. The reason is to prevent issues caused by stale copies stored in caches.

Frameworks such as Rails and Next.js offer built-in solutions. The idea is to bind the asset URL and its content by making the digest of the content part of the URL. This way changing the content changes the URL, so the asset won’t mutate.

It works well with the original stale-cache issue, but…

SXG subresource > file content + URL

The above approach doesn’t consider HTTP headers returned along with the asset. Those headers are part of the SXG subresource. If one of the headers changes, the integrity hash changes as well.

Many HTTP headers are set by the web server, outside of the web application. The framework doesn’t control all of them, therefore it can’t include them in the digest.

Cloudflare ASX partially solves that by stripping headers known to change frequently, such as Date, Age, or X-Request-Id from subresources. But if your assets use other unstable headers, you may experience issues, as shown in this demo.
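To find such unstable headers, it can help to capture the response headers of the same asset twice and compare them. Below is a minimal sketch of that comparison. The helper name is hypothetical, the headers are assumed to be plain objects, and the ignore list contains only the three headers named above, which are examples rather than a complete list of what ASX strips.

```javascript
// Headers this post names as examples of what Cloudflare ASX strips.
// Assumption: the real list may be longer.
const STRIPPED_BY_ASX = ['date', 'age', 'x-request-id'];

// Returns the sorted names of headers whose values differ between two
// responses to the same URL. Both arguments are plain objects mapping
// header names to values; names are compared case-insensitively.
function unstableHeaders(first, second) {
  const normalize = (headers) =>
    Object.fromEntries(
      Object.entries(headers).map(([name, value]) => [name.toLowerCase(), value])
    );
  const a = normalize(first);
  const b = normalize(second);
  const names = new Set([...Object.keys(a), ...Object.keys(b)]);
  return [...names]
    .filter((name) => !STRIPPED_BY_ASX.includes(name))
    .filter((name) => a[name] !== b[name])
    .sort();
}
```

For example, if two responses differ only in Date and in a hypothetical X-Served-By header, the function returns ['x-served-by']. A header present in only one of the responses is reported as well.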

Doesn’t cache fix that?

As I wrote earlier, subresources should be cacheable. If you want to see what happens when the Cache-Control header is invalid, here is a demo.

Cloudflare has an HTTP cache enabled by default. Even if a given subresource changes on each request, the first response will be stored in the cache (steps 6-9 on the SXG cache population diagram), and later requests will return the cached entry (steps 12-13).

HTTP headers are cached too. Therefore, from the Google SXG cache perspective, the mutable subresource doesn’t change. That’s good!

Things become interesting if the subresource gets modified after being retrieved from the cache or if the cache infrastructure has issues.

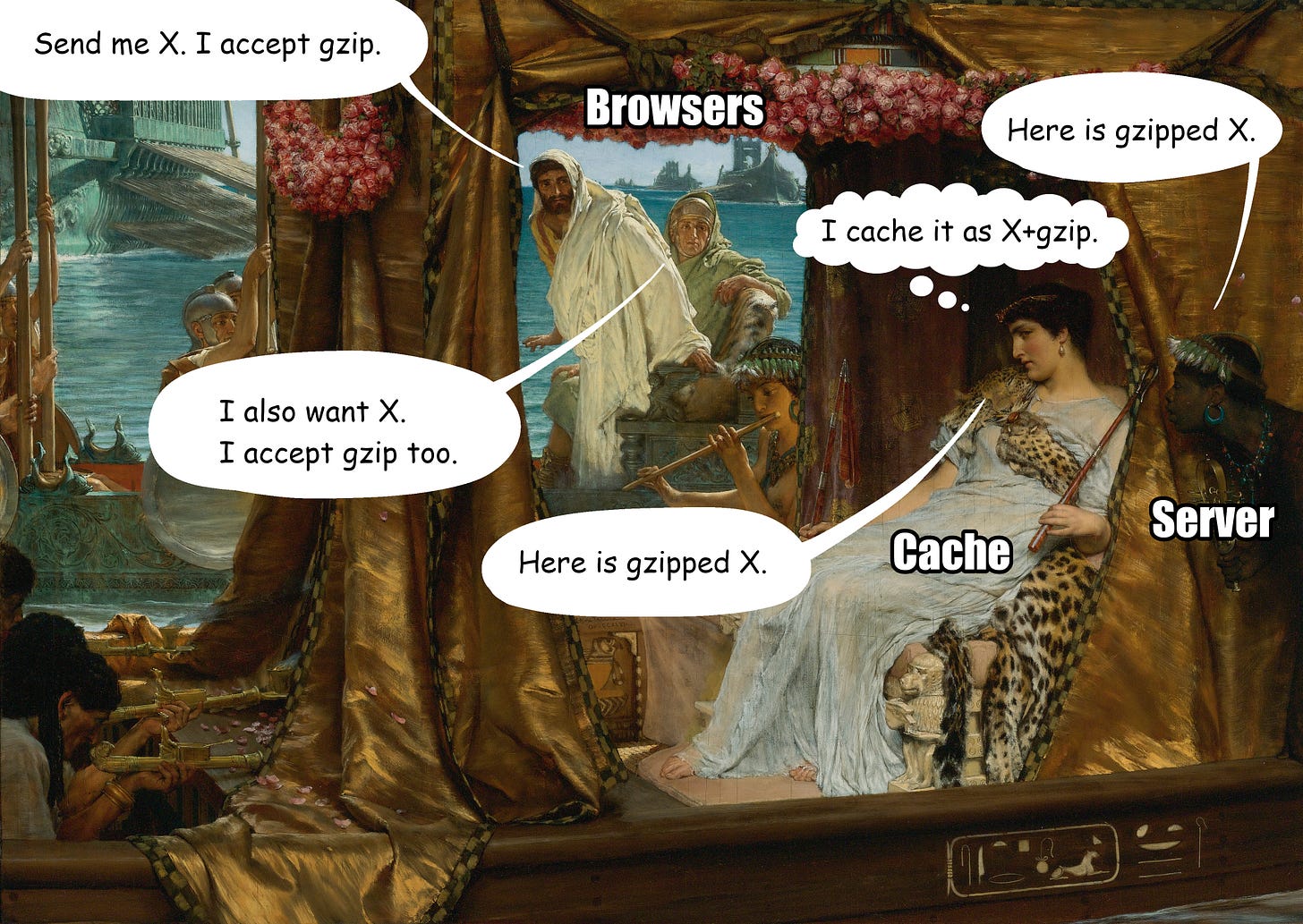

Compression negotiation

When performing an HTTP request, the browser typically uses the Accept-Encoding header to tell the server what compression methods it supports. It allows the server to use the best compression the browser understands, for example:

If the browser supports zstd and gzip, the server will respond with a zstd-compressed response.

If the browser supports only gzip, the server will respond with it.

The server will unilaterally decide if and how to compress the response if the browser doesn't set the Accept-Encoding header.

The problem arises when caching is involved. The cache stores the response under a cache key, typically created from the URL. However, requests for the same URL may accept different compression methods, so a cached response may be compressed in a way the next client doesn’t support. That’s why the component performing the compression (the web server or Cloudflare proxy) should inform the cache that the URL alone is not enough to construct the cache key.

Vary HTTP header

It does so by setting the Vary header to a list of request headers that may impact the response. In the case of compression, it puts Accept-Encoding there:

Vary: Accept-Encoding

Importantly, the Vary header may be omitted if the request doesn’t contain the Accept-Encoding header.
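The effect of Vary on a cache key can be illustrated with a simplified model. This is a generic sketch of how an HTTP cache may combine the URL with the request headers listed in Vary. It is not a description of how Cloudflare builds its keys, and the function name and key format are made up for this example.

```javascript
// Builds a cache key from the URL plus the request headers named in Vary.
// `varyHeader` is the Vary value of the stored response (may be undefined).
// `requestHeaders` is a plain object with lower-case header names.
function cacheKey(url, requestHeaders, varyHeader) {
  const varyNames = (varyHeader || '')
    .split(',')
    .map((name) => name.trim().toLowerCase())
    .filter(Boolean)
    .sort();
  // A header missing from the request contributes an empty value.
  const parts = varyNames.map((name) => `${name}=${requestHeaders[name] || ''}`);
  return [url, ...parts].join('|');
}
```

Without Vary, every request for /app.css shares one key, regardless of what compression the client accepts. With Vary: Accept-Encoding, a request accepting "zstd, gzip" and a request accepting only "gzip" end up under different keys.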

As HTTP headers are part of the subresource, the presence of the Vary header impacts the integrity hash. It could be therefore possible to generate two different versions of the same subresource:

with Vary header set, if the request contains an Accept-Encoding header,

without it otherwise.

Fortunately, Cloudflare ASX prevents this by removing the Vary header from the response. However, I found one edge case in which it doesn’t.

Subresource mutation caused by ASX itself

The issue occurs when the subresource to prefetch is behind a worker and uses the Cloudflare cache. There are at least two reasons for such a setup, both described earlier:

rewriting URLs of CDN-stored assets,

adding a Link header to all HTML responses by passing all traffic through the worker and letting it skip assets instead of configuring worker routing to avoid assets being processed.

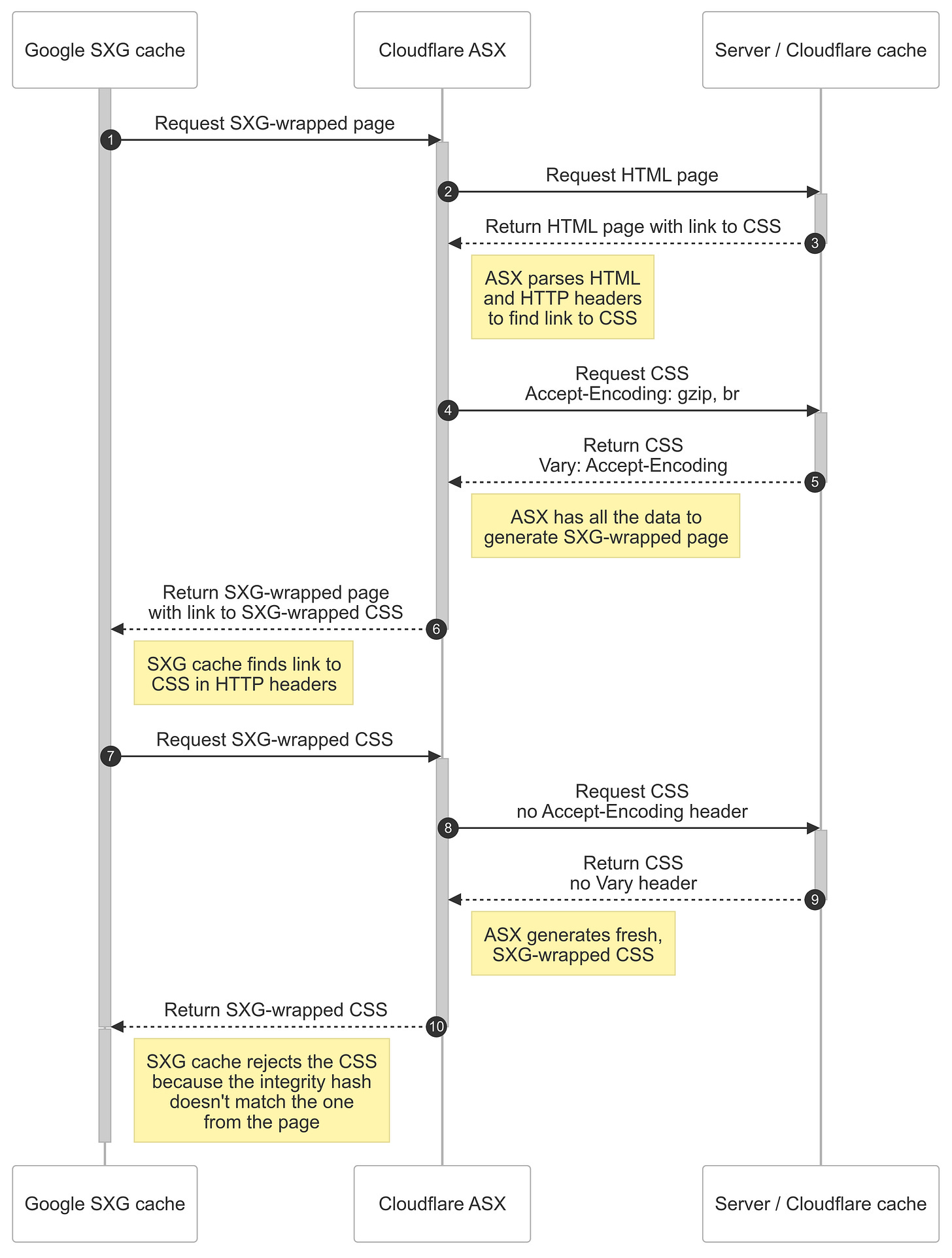

I used reverse engineering again to model what happens. Here is a simplified version of the previous diagram:

First, ASX asks for a subresource with an Accept-Encoding header set to "gzip, br" (step 4). Therefore, as we discussed, the response includes Accept-Encoding in the Vary header.

The integrity hash of the subresource is calculated and put in the Link header of the SXG-wrapped page returned to the Google SXG cache in step 6.

Next, the request for the subresource comes from Google (step 7) and is proxied by ASX (step 8). Google doesn't set the Accept-Encoding header and ASX honors the original request’s headers [2]. Therefore the response doesn't include the Vary header (step 9).

When Google receives the subresource (step 10) it compares its integrity hash with the one from the Link header of the SXG-wrapped page it received in step 6. As they don’t match, the subresource is discarded and not cached.

The subresource is not cached, but it’s still referenced from the cached SXG-wrapped page. When the browser tries to prefetch it, a fallback page is returned and a CORS error happens.

It’s worth noting that this issue doesn’t happen when:

the Cloudflare cache is disabled, probably because Vary is a cache-related header,

a worker is not used, probably because of interactions between a worker and ASX (ASX being a special instance of a worker).

To see it in action, take a look at the demonstration.

Workarounds for ASX bug

Until Cloudflare fixes this issue, the simple solution is to set the Vary header for all static asset responses, even when requests don’t specify the Accept-Encoding header.

Nginx

For assets included with the application, you can use the following nginx configuration snippet in locations containing static files:

location ~* \.(?:css|js|gif|png|jpeg|jpg|ico|ttf|woff|woff2|svg)$ {
  # Always set Vary header, because of the issue in Cloudflare ASX
  # when used along with workers.
  # Note: more_set_headers requires the headers-more nginx module.
  more_set_headers 'Vary: Accept-Encoding';

  # The rest of assets-related configuration, such as cache expiration
}

Of course, the above solution doesn’t work for assets stored in the CDN and proxied through a worker.

Worker

Therefore, the more elegant solution is to implement the workaround in the worker itself. This way the fix doesn’t pollute the system and is contained closest to the cause of the issue (the worker). The downside is that you need to remember to apply it in every worker if you use several.

Here is a minimal worker setting the Vary header:

function addVaryHeader(response) {
  const vary = response.headers.get('vary');
  if (!vary || !vary.toLowerCase().includes('accept-encoding')) {
    // Set the Vary header because of the issue in Cloudflare ASX
    // when used along with workers. For more details see:
    // https://blog.pawelpokrywka.com/p/fixing-sxg-prefetching-errors-caused-by-mutable-subresources
    const newResponse = new Response(response.body, response);
    newResponse.headers.set('vary', 'Accept-Encoding');
    return newResponse;
  }
  return response;
}

export default {
  async fetch(request) {
    const response = await fetch(request);
    // Add Vary header to all responses.
    // For production, I would suggest doing so only for assets.
    return addVaryHeader(response);
  },
};

I won’t rehash how to create and deploy Cloudflare workers. I leave it as an exercise for you to merge this code with your workers, but it should be easy. Just wrap the response you are about to return with the addVaryHeader() function for static assets.
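To limit the workaround to static assets, the worker needs some way to recognize them. One option, sketched here under the assumption that assets can be identified by file extension, is a small helper reusing the extension list from the nginx snippet above. The name isStaticAsset is hypothetical.

```javascript
// Same extensions as in the nginx snippet above.
const ASSET_EXTENSIONS = /\.(?:css|js|gif|png|jpeg|jpg|ico|ttf|woff|woff2|svg)$/i;

// Returns true when the URL path ends with a static asset extension.
// The query string is ignored because only the pathname is tested.
function isStaticAsset(url) {
  return ASSET_EXTENSIONS.test(new URL(url).pathname);
}
```

In the fetch handler, the last line would then become: return isStaticAsset(request.url) ? addVaryHeader(response) : response;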

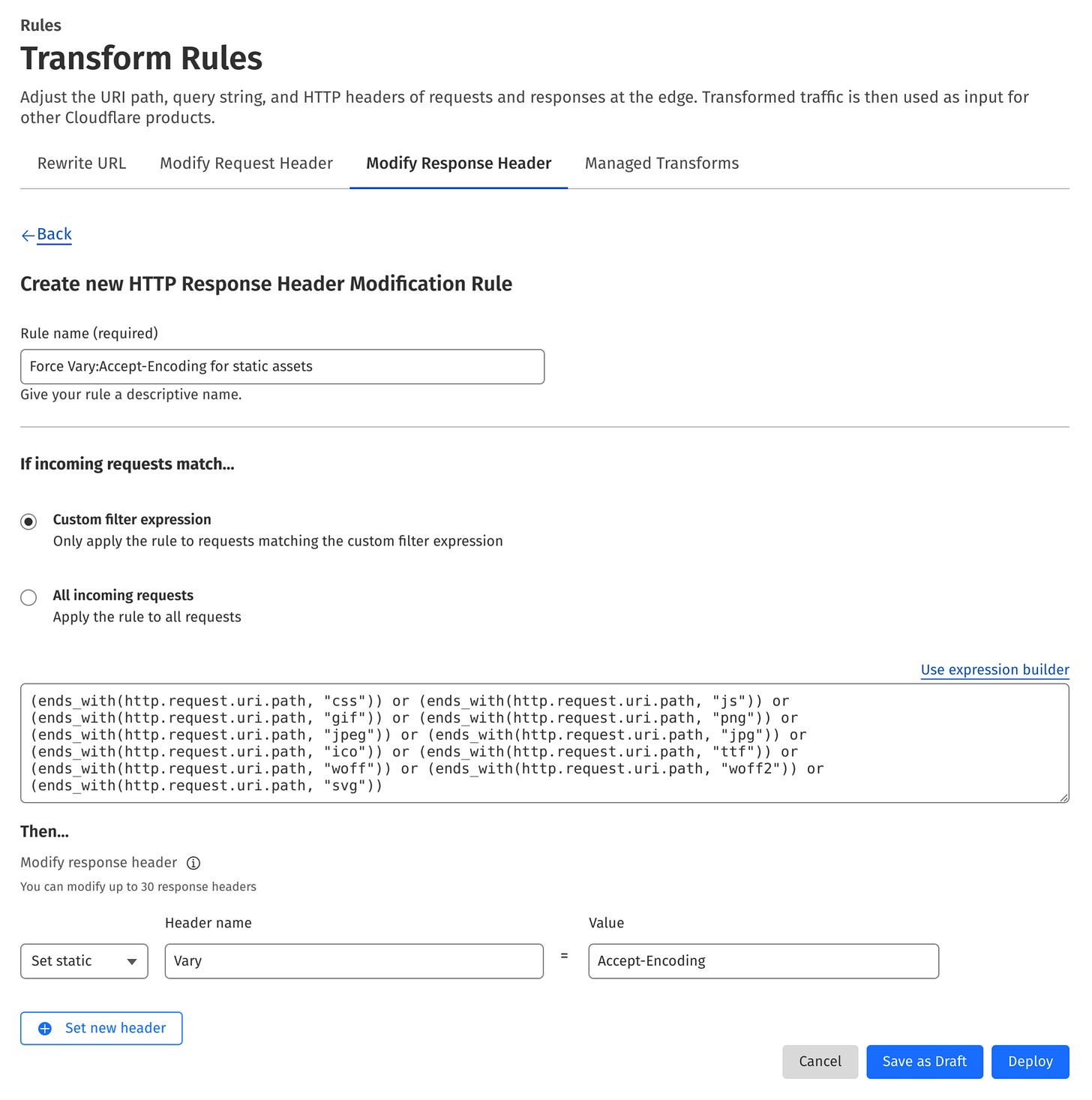

Transform rule

Alternatively, you can create a Cloudflare transform rule that adds the Vary header to all responses for static assets. The advantage of this approach is that you need to do this once and it benefits all the workers. To do that:

Open your website in the Cloudflare dashboard.

Go to Rules → Transform Rules → Modify Response Header.

Hit the Create rule button.

Provide a name for your rule, for example: Force Vary: Accept-Encoding for static assets.

Click the Edit expression link in the Expression Preview section and paste the following expression into the text area:

(ends_with(http.request.uri.path, "css")) or (ends_with(http.request.uri.path, "js")) or (ends_with(http.request.uri.path, "gif")) or (ends_with(http.request.uri.path, "png")) or (ends_with(http.request.uri.path, "jpeg")) or (ends_with(http.request.uri.path, "jpg")) or (ends_with(http.request.uri.path, "ico")) or (ends_with(http.request.uri.path, "ttf")) or (ends_with(http.request.uri.path, "woff")) or (ends_with(http.request.uri.path, "woff2")) or (ends_with(http.request.uri.path, "svg"))

In the Then section select Set static from the menu and type Vary into the Header name and Accept-Encoding into the Value.

The form should look similar to the one below:

If everything is ok, hit the Deploy button. From now on, your assets will always have the Vary header set to Accept-Encoding.

Cache invalidation reminder

It’s a good idea to invalidate the cache after performing those changes as described at the beginning of this post. Otherwise, you may need to wait up to 7 days for the results.

HTTP/2 Prioritization

In 2019 Cloudflare announced a new feature called Enhanced HTTP/2 Prioritization. It promises improved page load times by optimizing asset delivery so that the browser fetches the most important assets before others.

The same day, they introduced parallel streaming of progressive images. It uses the same mechanism but for data ranges within individual image files. For example, it prioritizes the beginning of a JPEG file containing a low-resolution image. In effect, on pages with more than one image, the browser first renders all of them in low quality, then continues to download them, gradually improving the resolution.

Those features promise improvements in the UX, so I was eager to turn them on. Both are controlled by one switch:

SXG doesn’t like HTTP/2 prioritization

I found a lot of prefetching errors were related to JPEG files. After examining the issue, I found that responses for JPEG files contained an HTTP header missing from other responses:

Cf-Bgj: h2pri

According to a response from a Cloudflare employee, h2pri means the image has been processed to support HTTP/2 prioritization for Progressive Streaming of JPEGs.

To make things more interesting, the header is set on the second response (cache HIT), while the first response (cache MISS) doesn’t include it.

Processing takes some time, therefore I assume it is being put in the background and the first response doesn’t wait for it to keep the latency low. The processed image lands in the cache and that’s the reason it includes the Cf-Bgj (Cloudflare Background Job?) header.

The subresource mutates on the second request. The issue is very similar to the previous one with the Vary header, but this time the header name is Cf-Bgj. A mutable subresource is rejected by the Google SXG cache, and because the HTML document depends on it, SXG prefetching breaks.

Workarounds for a Cloudflare bug

I prepared a page containing a demonstration of the issue.

I was tempted to use Cloudflare transform rules to remove the Cf-Bgj header. Unfortunately, it’s a special header that can’t be removed. Trying to create a transform rule would result in:

'remove' is not a valid value for operation because it cannot be used on header beginning with 'cf-'

From my experiments, the issue manifests only if all three conditions apply to a given JPEG subresource:

Enhanced HTTP/2 Prioritization feature is turned on. It is responsible for setting the Cf-Bgj header.

Cloudflare cache is in use. That’s probably related to the sidenote above about latency. Image processing might introduce latency if done synchronously without caching.

Cloudflare worker is not used. I suspect it’s related to workers being allowed to manipulate HTTP/2 prioritization by setting the Cf-Priority header. Maybe there is cleanup logic that removes internally used headers, including Cf-Bgj, and it runs only when workers are used.

Turning off asset caching is probably a less-than-optimal idea. However, if you don’t use workers for your assets, you may want to reconsider that decision, because putting a worker in front of them makes the issue disappear. Any worker will do. The most minimal one I could think of is the following one-liner:

export default { fetch: (r) => fetch(r) };

If you still want to avoid workers, for example, due to additional financial costs, the remaining solution is to disable the Enhanced HTTP/2 Prioritization feature.

When writing this post, I decided to test whether the feature works as advertised. I used the test page by Patrick Meenan and followed his instructions. Unfortunately, I was unable to see any improvements, either in asset prioritization or in progressive image streaming. The results look the same whether Enhanced HTTP/2 Prioritization is enabled or disabled.

Remember to invalidate the cache after implementing the changes to see the results earlier.

To be continued…

There are still a lot of things to cover. In the mutable subresources category of errors, I’ve identified one more. It was particularly tricky and hard to reproduce. I will describe how I found and fixed it in the next post.

[1] There are at least two Cloudflare caches: the official one and the hidden one. I will describe the latter in the upcoming post.

[2] This looks like a bug, because according to the documentation Cloudflare should always set the Accept-Encoding header.