Other causes of Signed Exchanges errors

From various limits to global outages—how to tackle SXG challenges (part 6 of 8)

In the two previous posts, you learned about Signed Exchanges (SXG) prefetching errors caused by mutable subresources. You now understand that a good SXG experience requires keeping your subresources unchanged over time, and you know how to deal with threats to their immutability.

In this post, I will explore the remaining errors I identified. At the time of writing, most of them are either undocumented or covered only by outdated documentation.

Just getting started with improving website performance? Start here with my beginner-friendly introduction to Signed Exchanges. If you're already familiar with SXG, please continue to the technical details below.

How big is Google's mouth?

Trouble with images continues

Even after implementing a workaround for the progressive loading issue, I was still struggling with prefetching images.

This time, however, the issue behaved differently. Instead of affecting every JPEG file, it affected only a few specific images.

After inspecting them, I found a common trait: they were huge, and their size was clearly causing the issue.

My website uses responsive images to look sharp on 4K screens while loading fast on low-end devices. It seems the image-processing solution we use to optimize images uploaded by our users sometimes fails, leaving the images unoptimized. An unoptimized high-resolution image can easily reach 1 MB or more.

Later, I found the same issue can affect other subresource types. In my case, some of the JavaScript chunks produced by Next.js were too large.

SXG maximum size

The official Google documentation mentions 8 MB as the maximum SXG size. Interestingly, Cloudflare ASX honors this value (it can produce SXGs up to this size), but Google itself does not: its SXG cache refuses to store files that large.

I had to determine the real limit experimentally by generating files of different sizes and testing which ones Google cached. Here is the result:

The maximum size of a file that can be stored in a Google SXG cache is roughly 1044 KB (1044000 bytes).

You may ask why it's such an odd number instead of a round 1 MiB (2^20 = 1,048,576 bytes) or at least 1 MB (10^6 bytes). I think Google limits the size of the entire cache entry to 1 MiB, which leaves roughly 4.5 KB (1,048,576 - 1,044,000 = 4,576 bytes) for everything besides the actual file contents, including:

HTTP response headers,

SXG metadata and cryptographic stuff,

possibly some additional metadata specific to Google's cache.

The size of these parts varies, so it's impossible to reliably determine the exact maximum file size.

To ensure a great user experience and minimize data usage, keep your subresource sizes well below the limit. While Google allows subresources up to this size, smaller files help protect users from excessive data consumption and lead to faster page loads.

Size of HTML document

Although it's unlikely, your HTML documents must also stay below the 1044 KB limit. Otherwise, you will get one of the following messages in the Warning HTTP header of the response generated by the Google SXG cache:

```
199 - "debug: content has ingestion error: Not a valid signed-exchange."
199 - "debug: content has ingestion error: Error fetching resource: missing magic prefix; extracting prologue"
```

You can check it yourself on the demo page I prepared.
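When debugging, it helps to pull these messages out of the Warning header programmatically. Here is a minimal sketch, assuming the header follows the standard `code agent "text"` layout shown above and may contain several comma-separated warnings. `parseWarnings` and `ingestionErrors` are hypothetical helper names.

```javascript
// Parse a Warning header value: code agent "text", possibly several
// comma-separated warnings in one value
function parseWarnings(headerValue) {
  const warnings = [];
  const re = /(\d{3})\s+(\S+)\s+"((?:[^"\\]|\\.)*)"/g;
  let match;
  while ((match = re.exec(headerValue)) !== null) {
    warnings.push({ code: Number(match[1]), agent: match[2], text: match[3] });
  }
  return warnings;
}

const INGESTION_PREFIX = 'debug: content has ingestion error: ';

// Return only the ingestion error messages reported by the Google SXG cache
function ingestionErrors(headerValue) {
  return parseWarnings(headerValue)
    .filter((w) => w.code === 199 && w.text.startsWith(INGESTION_PREFIX))
    .map((w) => w.text.slice(INGESTION_PREFIX.length));
}
```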

How to keep your subresources small

The only proper solution is to ensure your subresources don’t exceed the limit. If some of the images are not optimized, optimize them. Consider decreasing image quality and/or resolution if the file size remains over the limit after optimization.

Try to keep your scripts compact. Minification is standard practice, but it's worth mentioning in case you aren't using it for some reason. Avoid large libraries that end up in your bundle, and consider loading rarely used logic dynamically.

You may also want to adjust how the scripts are split into chunks so that no chunk exceeds the limit. For example, Next.js doesn't split the _app chunk by default. To force it, you can extend your next.config.js using the following template:

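```javascript
function forceChunking(config, pages) {
  const splitChunks = config.optimization.splitChunks;
  // splitChunks may be disabled (e.g. in development); nothing to do then
  if (!splitChunks) return;

  const originalChunks = splitChunks.chunks;

  splitChunks.chunks = (chunk) => {
    // Allow splitting certain pages because they may eventually
    // become too large for SXG. For the full context see:
    // https://blog.pawelpokrywka.com/p/other-errors-with-signed-exchanges
    if (pages.includes(chunk.name)) return true;

    // Fall back to the original setting, which may be a function
    // or one of the strings "all", "initial", "async"
    if (typeof originalChunks === 'function') return originalChunks(chunk);
    if (originalChunks === 'all') return true;
    if (originalChunks === 'initial') return chunk.canBeInitial();
    return !chunk.canBeInitial(); // "async", webpack's default
  };
}

const nextConfig = {
  // Your current config options

  webpack: (config, options) => {
    if (!options.isServer) forceChunking(config, ['pages/_app']);

    // Your current webpack customizations

    return config;
  },
};

module.exports = nextConfig;
```

Other frameworks built on webpack may run into similar issues, and the same webpack configuration change may help. However, it will probably need some adjustments.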

Choosing not to prefetch

If your subresources are still too large, the solution of last resort is to not prefetch them. This will impact your page load speed, but at least other subresources will be prefetched.

You could simply delete the <link> tag. However, if you want to keep preloading your assets for Early Hints (I mention them in the second part) and for standard, non-SXG page prefetching, there is a better option.

Just add the data-sxg-no-header attribute to <link> tags you want to exclude from SXG but keep for everything else:

```html
<link rel="preload" href="2big.jpg" as="image" data-sxg-no-header />
```

The attribute is documented here.
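If you generate preload tags yourself, you could add the attribute automatically based on file size. This is a hypothetical helper (`preloadLinkTag` is not part of any library), assuming you know each asset's size in bytes at build time and reusing the approximate limit I measured.

```javascript
const SXG_CACHE_LIMIT_BYTES = 1044000;

// Escape characters that would break a double-quoted HTML attribute
function escapeAttr(value) {
  return String(value).replace(/&/g, '&amp;').replace(/"/g, '&quot;');
}

// Hypothetical helper: build a preload <link> tag and exclude assets that are
// too large for the Google SXG cache from SXG via data-sxg-no-header
function preloadLinkTag({ href, as, sizeBytes }) {
  const extra = sizeBytes >= SXG_CACHE_LIMIT_BYTES ? ' data-sxg-no-header' : '';
  return `<link rel="preload" href="${escapeAttr(href)}" as="${escapeAttr(as)}"${extra} />`;
}
```

The tag stays in the HTML either way, so Early Hints and regular prefetching keep working for the large asset.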

The idea for a potential Cloudflare-side solution

Cloudflare already limits the SXGs it generates to 20 subresources to ensure compatibility with Google's SXG cache. It would be consistent to also exclude, during SXG generation, any subresources that exceed the Google SXG cache's size limit.
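A rough sketch of how that proposal might look. It is purely hypothetical and not how Cloudflare ASX currently behaves: drop subresources over the size limit first, then apply the 20-subresource cap. I assume subresources arrive in document order and that the earlier ones matter most.

```javascript
const MAX_SUBRESOURCES = 20;
const SXG_CACHE_LIMIT_BYTES = 1044000;

// Hypothetical: filter out subresources Google's cache would refuse,
// then keep at most 20 (document order assumed to reflect importance)
function selectSxgSubresources(subresources) {
  return subresources
    .filter((r) => r.sizeBytes < SXG_CACHE_LIMIT_BYTES)
    .slice(0, MAX_SUBRESOURCES);
}
```

Filtering before capping means an oversized file no longer takes one of the 20 slots away from a subresource that could actually be prefetched.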

403 forbidden errors

Sometimes, during debugging, you may encounter this message in the Warning HTTP header of the response generated by the Google SXG cache:

```
199 - "debug: content has ingestion error: Error fetching resource: origin response code = 403"
```

This means that instead of a normal HTTP/2xx response, Googlebot received an HTTP/403 (Forbidden) response while fetching the page to be stored in the Google SXG cache.

Unless your app itself returned HTTP/403 (which can happen, for example, on pages that require logging in), the most probable explanation is that Cloudflare blocked the request.
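To speed up triage, the ingestion errors discussed so far could be mapped to their likely causes. The sketch below is a heuristic based only on observations from this post. `diagnoseIngestionError` is a hypothetical name, and it expects the message text without the `debug: content has ingestion error:` prefix.

```javascript
// Heuristic: map an ingestion error message to a likely cause
function diagnoseIngestionError(message) {
  const origin = /origin response code = (\d{3})/.exec(message);
  if (origin) {
    const status = Number(origin[1]);
    if (status === 403) {
      return 'Origin returned 403: likely blocked by Cloudflare bot protection, unless the page requires logging in';
    }
    return `Origin returned HTTP ${status} instead of 2xx`;
  }
  if (message.includes('missing magic prefix')) {
    return 'Response is not an SXG: the document may exceed the ~1044 KB cache limit';
  }
  if (message.includes('Not a valid signed-exchange')) {
    return 'Not a valid SXG: the document may be too large, among other causes';
  }
  return 'Unknown ingestion error';
}
```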

Why does Cloudflare block some requests?

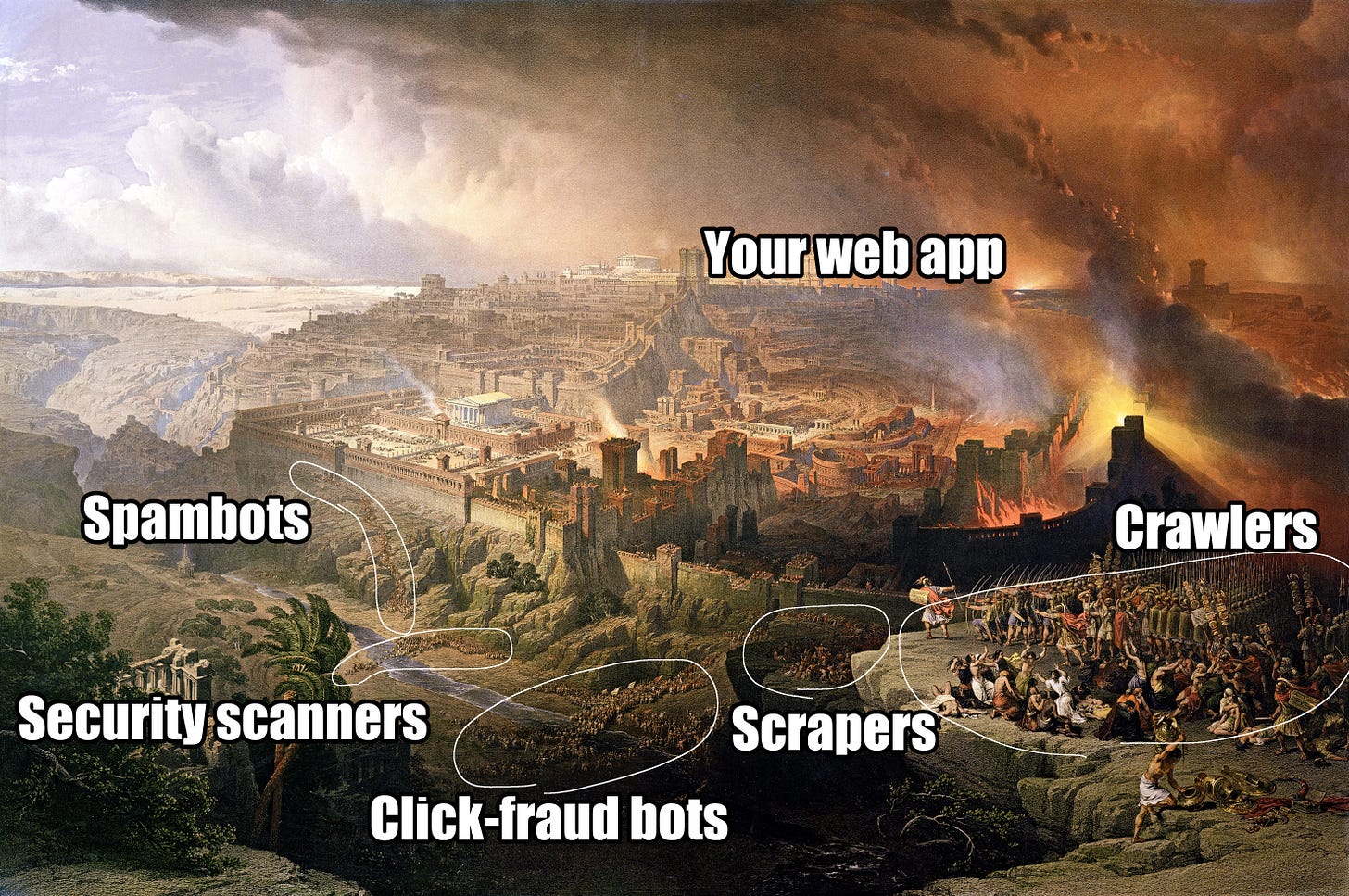

Cloudflare allows website owners to protect their property against bots.

When enabled, Cloudflare scans requests for signs of automated traffic. Depending on your plan, you can choose what to do with bot-generated requests. For example, on the Pro plan (the minimum for SXG support), you can allow bots, block them, or challenge them with a captcha. If a person is mistakenly classified as a bot, the captcha still lets them access the site.

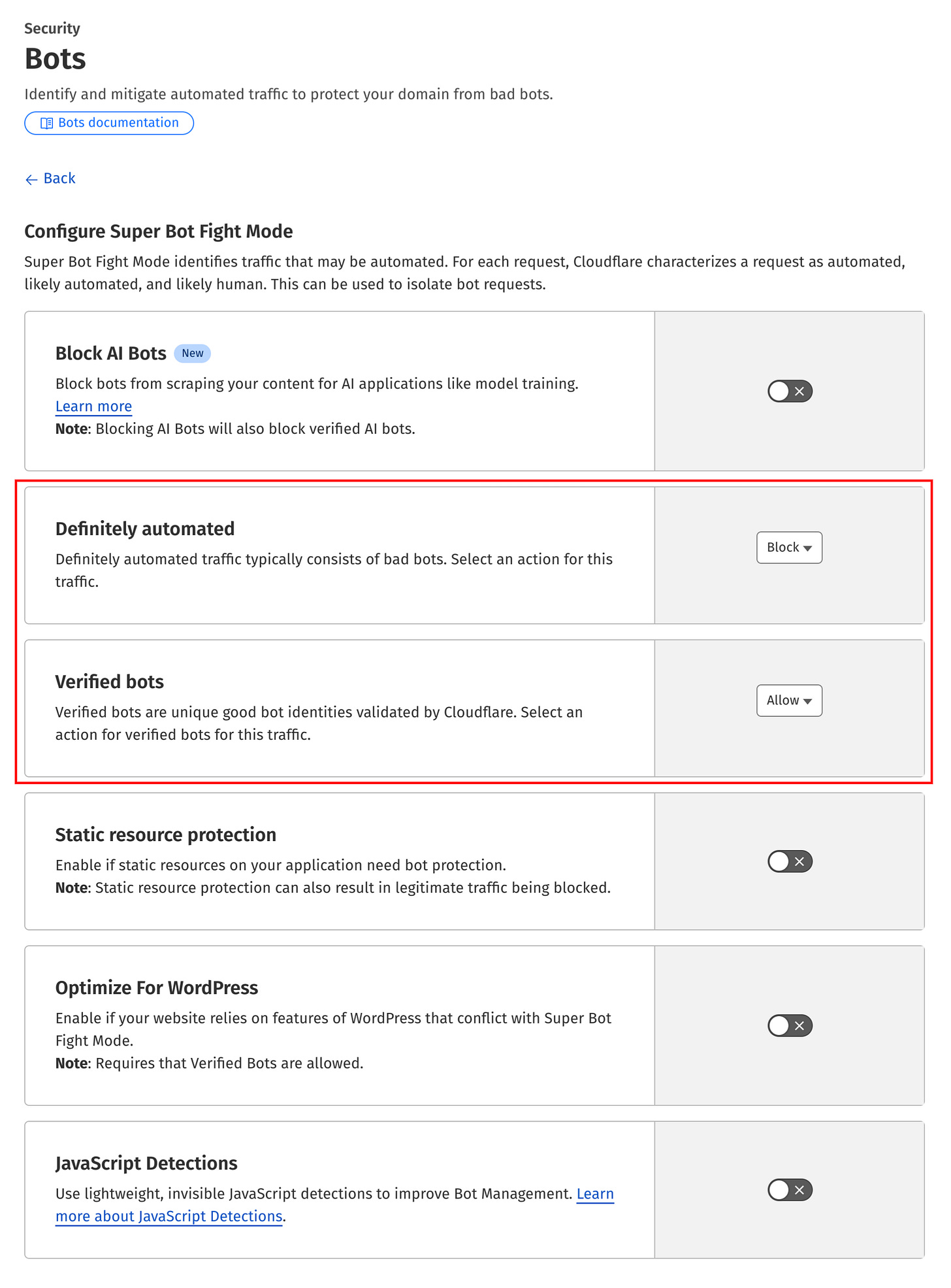

As some bots are useful (such as Googlebot), Cloudflare gives you an option to allow so-called good bots. You can see all the options below:

The recommended configuration for public websites is to block or challenge malicious bots while allowing legitimate ones like Googlebot. However, even with these settings, Cloudflare may still inadvertently block Googlebot's access!

Why does Cloudflare block Googlebot?

At first, I thought the blocking happened because I was repeatedly making Googlebot perform debug requests to strange-looking test URLs. Maybe Cloudflare uses machine learning to distinguish those from normal Googlebot traffic?

Then, I opened the Google Search Console and saw a lot of HTTP/4xx responses for normal, non-debug Googlebot requests. Up to 5% of the traffic was blocked. It wasn’t looking good. Blocking even some of the Googlebot requests is dangerous for SEO.

When I allowed all bots, the issue disappeared.

A better solution

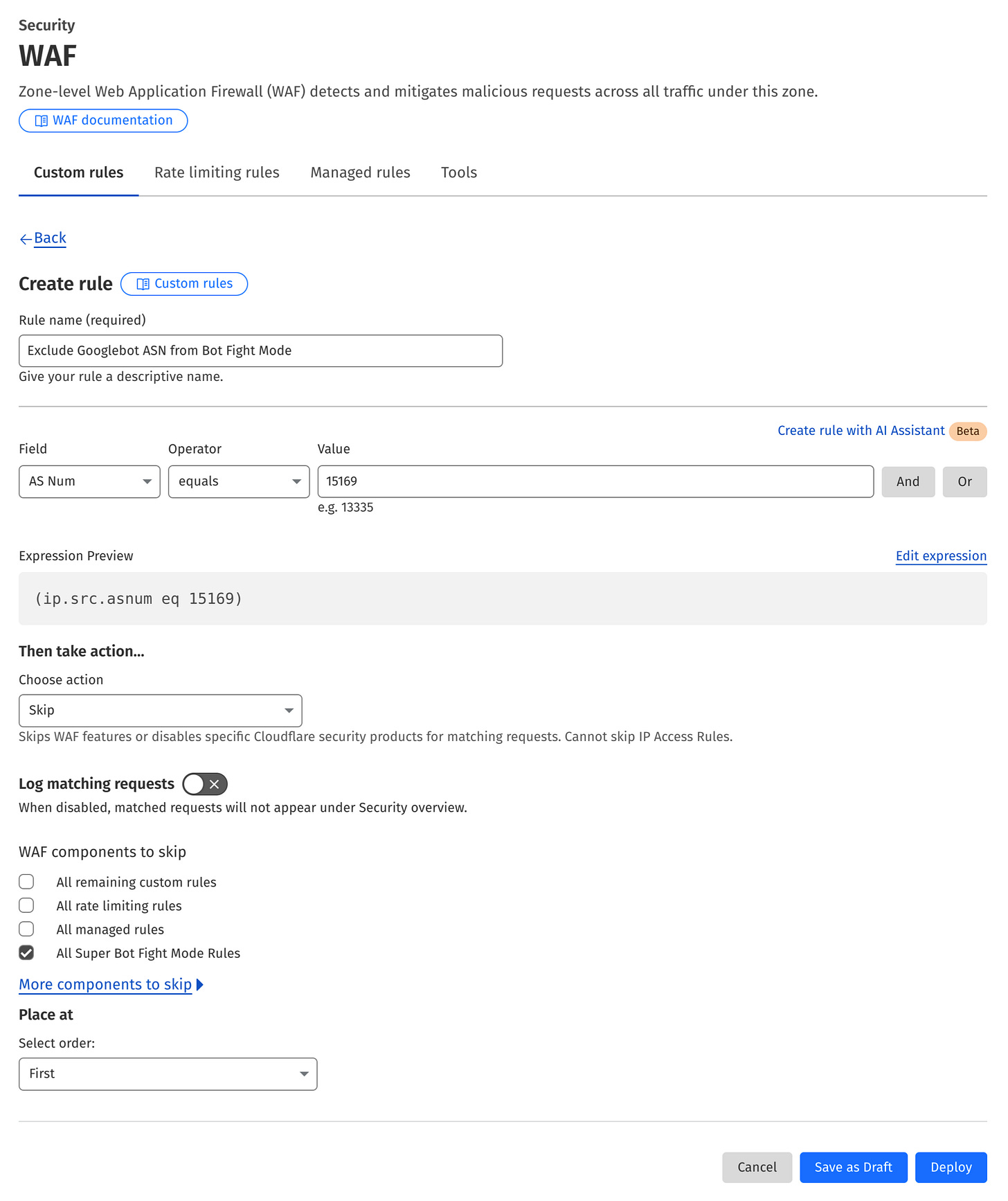

The bot-blocking feature is great; I didn’t want to disable it. However, I didn’t want to prevent Googlebot from accessing my site, which led me to the solution based on the Cloudflare Web Application Firewall (WAF).

I created a rule that skips bot blocking for traffic from Google IP addresses.

To do that, go to the Security section and open the WAF submenu. Choose the Custom rules tab and hit the Create rule button. Then, configure the rule as shown below and click the Deploy button:

Instead of maintaining an ever-changing list of Google IP addresses, I matched against a single Autonomous System (AS) number. You can use RIPEstat or any other online tool to find the number assigned to Google (Google's main AS number is 15169).

Google SXG cache ingestion issue

One day, I observed a dramatic increase in SXG prefetching errors. The following days were even worse, and eventually SXG stopped working entirely. I believe it was caused by a global outage of the Google SXG cache.

It lasted about two weeks. I've experienced it only once, but it may happen again. If your SXG metrics go haywire for no apparent reason, it's a good idea to check whether the Google SXG cache is working.

Sometimes, the Google SXG cache ingests content so slowly that it seems dead. This is likely due to heavy load on the cache. I've occasionally experienced this during peak hours, especially when the U.S. wakes up.
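To check whether the cache works, you can request your page through the Google SXG cache and inspect the response, including its Warning header. The sketch below builds such a URL. It assumes the cache uses the AMP-cache-style scheme `https://<encoded-domain>.webpkgcache.com/doc/-/s/<domain><path>` as I understand it, and it is simplified: no IDN/punycode handling, no fallback for encoded domains longer than 63 characters, and HTTPS pages only. `sxgCacheUrl` is a hypothetical helper.

```javascript
// Simplified, assumption-based builder for a Google SXG cache URL
function sxgCacheUrl(pageUrl) {
  const url = new URL(pageUrl);
  // Encode the domain: "-" becomes "--", then "." becomes "-"
  let subdomain = url.hostname.replace(/-/g, '--').replace(/\./g, '-');
  // Avoid a "--" in the 3rd and 4th positions (reserved for punycode labels)
  if (subdomain[2] === '-' && subdomain[3] === '-') {
    subdomain = `0-${subdomain}-0`;
  }
  // "/doc/-/s/" addresses an HTTPS document in the cache
  return `https://${subdomain}.webpkgcache.com/doc/-/s/${url.hostname}${url.pathname}${url.search}`;
}
```

Opening the resulting URL in a browser (or fetching it with an `Accept: application/signed-exchange;v=b3` header) lets you see whether the cache serves your page or reports an error.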

Temporary errors

Even if you do everything correctly, you may encounter this message in the Warning HTTP header of the response generated by the Google SXG cache:

```
199 - "debug: content has ingestion error: Not a valid signed-exchange."
```

The warning should disappear within a few hours at most. It may be related to the number of new (not yet cached) subresources the page depends on. If that number is high enough, the error may occur temporarily.

If you know some errors are temporary, you won't waste time trying to find and fix a nonexistent cause.

Note, however, that the exact same error may also mean you have to fix your page because Cloudflare fails to generate an SXG version of it. Please refer to the first post in the series on how to make your page SXG-ready.

How many subresources are too many?

In the official documentation, you will find that the maximum number of subresources is 20. This is a hard limit you won’t be able to exceed.

However, there is also a soft limit. Exceeding it will cause temporary errors until all the subresources are cached.

In my experiments, the error mentioned above may manifest itself when the page introduces 14 or more new subresources. By testing various scenarios, I came to the following formula:

2 × new + existing < 34

If the inequality holds for your numbers of new and existing subresources, your page should have no issues. For example, 13 new and 7 existing subresources will be fine (2 × 13 + 7 = 33), while 15 new and 5 existing subresources may lead to temporary problems (2 × 15 + 5 = 35).

Cloudflare ASX utilization fluctuations may impact the formula, so take it with a grain of salt.
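The heuristic is easy to turn into a quick check. A minimal sketch with hypothetical names: the constant 34 is just my empirical value, and the hard limit of 20 comes from the official documentation.

```javascript
const HARD_LIMIT = 20; // documented maximum number of SXG subresources
const SOFT_LIMIT = 34; // empirical; may shift with Cloudflare ASX load

// Classify a page by its numbers of new (uncached) and existing (cached) subresources
function checkSubresourceCounts(newCount, existingCount) {
  if (newCount + existingCount > HARD_LIMIT) return 'over-hard-limit';
  return 2 * newCount + existingCount < SOFT_LIMIT ? 'ok' : 'may-fail-temporarily';
}
```

With the hard limit at 20, the soft limit is reached at 14 new subresources (2 × 14 + 6 = 34), which matches the threshold I observed.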

Why do these errors occur?

Based on my research, I conclude the cause is related to the latency guarantees of Cloudflare ASX. I believe there is a maximum latency ASX may add while generating an SXG-wrapped page. To avoid exceeding it, ASX has a plan B: returning the original page without SXG wrapping.

If this happens, the Google SXG cache receives a response of a different type than expected. That’s why the warning about an invalid signed exchange appears.
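One way to observe this fallback from the outside is to look at the Content-Type of the response: an SXG is served as `application/signed-exchange;v=b3`, while the fallback is the plain HTML page. `isSignedExchange` and `servedAsSxg` are hypothetical helpers, and I assume an `Accept` header asking for SXG is enough for Cloudflare to attempt wrapping, which may not hold for every configuration.

```javascript
// An SXG response is served with the media type application/signed-exchange
function isSignedExchange(contentType) {
  if (!contentType) return false;
  const mediaType = contentType.split(';')[0].trim().toLowerCase();
  return mediaType === 'application/signed-exchange';
}

// Assumption: asking for SXG via Accept is enough for Cloudflare to try wrapping;
// other request properties may also matter. Requires Node 18+ (global fetch).
async function servedAsSxg(url) {
  const res = await fetch(url, {
    headers: { Accept: 'application/signed-exchange;v=b3' },
  });
  return isSignedExchange(res.headers.get('content-type'));
}
```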

Calculating integrity hashes of subresources takes time, so Cloudflare ASX uses a special-purpose cache for them, which I discovered accidentally. If all the integrity hashes are cached, the SXG is generated quickly. The more integrity hashes have to be calculated, the longer it takes, eventually exceeding the limit.

Retrieving an integrity hash from the ASX cache takes time too. Although it's probably a fraction of the time needed to calculate one, it's not zero. That's why the number of existing (cached) subresources also counts.
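The effect of such a cache can be illustrated with a memoized hash function. This is a simplified model, not Cloudflare's code: as far as I understand, ASX computes a `header-integrity` hash over each subresource's signed exchange headers, whereas the sketch hashes raw bytes, and keying the cache by URL is my assumption (safe only because subresources are expected to be immutable). `integrityFor` is a hypothetical name.

```javascript
const crypto = require('crypto');

// Illustrative cache of integrity hashes, keyed by subresource URL
const integrityCache = new Map();

function integrityFor(url, body) {
  const cached = integrityCache.get(url);
  if (cached) return { value: cached, fromCache: true }; // cheap lookup
  // Expensive path: hash the content (simplified; real ASX hashes SXG headers)
  const value = 'sha256-' + crypto.createHash('sha256').update(body).digest('base64');
  integrityCache.set(url, value);
  return { value, fromCache: false };
}
```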

In my tests, the size of the subresource doesn’t impact the ASX behavior—the formula stays the same. It probably means ASX estimates the time it will take to include all the integrity hashes based on the number of new and cached subresources instead of actually measuring how long it takes.

Things you can’t control directly

Some errors happen because of reasons outside of your control, at least partially. Here are the scenarios I identified, but there may be others:

Not enough time

The user may click on the Google search result before all the subresources are prefetched. This could be due to a slow or unreliable connection and/or the user acting very quickly.

While you cannot control either of these factors, you can attempt to reduce the total size of the subresources to be prefetched. You may need to balance the prefetched page completeness and SXG error rate.
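One way to strike that balance is to prefetch only the most important subresources up to a total byte budget and mark the rest with `data-sxg-no-header`. A hypothetical sketch: `pickWithinBudget`, the priority values (lower means more important), and the budget are assumptions you would tune for your own pages.

```javascript
// Hypothetical: greedily pick subresources by priority until the byte budget is used
function pickWithinBudget(subresources, budgetBytes) {
  const sorted = [...subresources].sort((a, b) => a.priority - b.priority);
  const picked = [];
  let used = 0;
  for (const r of sorted) {
    if (used + r.sizeBytes > budgetBytes) continue; // skip, a smaller one may still fit
    picked.push(r);
    used += r.sizeBytes;
  }
  return { picked, used };
}
```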

New tab

The user may open the page in a new tab. In my tests, it breaks the prefetching of subresources.

Interestingly, it doesn’t impact the prefetched HTML page—it is still used by the browser. Only subresources are dropped.

My unconfirmed explanation of this phenomenon is that the Chrome browser uses different caches for SXG pages and SXG subresources. The former is similar to a normal browser cache—it’s shared between tabs. The latter uses an isolated, per-tab cache, probably for the same reason as the all-or-nothing principle I described in the third part: privacy.

Browser deciding not to prefetch (unconfirmed)

The browser is not required to perform prefetching.

In theory, under suboptimal conditions such as a slow connection or low battery, the browser may decide to conserve resources and skip prefetching (as the quicklink library does).

I tried triggering this behavior on my Android phone by disabling Wi-Fi, downgrading to 3G, and then enabling data saver and battery saver. The Chrome browser slowly, but happily, prefetched everything.
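For illustration, here is the kind of check quicklink performs before prefetching (skipping when Save-Data is on or the effective connection type is 2G), written as a standalone function. This is not Chrome's SXG prefetch logic; `shouldPrefetch` is a hypothetical name, and in a browser you would pass `navigator.connection`.

```javascript
// Quicklink-style heuristic; `connection` follows the Network Information API shape
function shouldPrefetch(connection) {
  if (!connection) return true; // API unavailable: no signal to hold back
  if (connection.saveData) return false; // user asked to reduce data usage
  if (/2g/.test(connection.effectiveType || '')) return false; // "2g" or "slow-2g"
  return true;
}
```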

I was not able to observe the browser avoiding prefetching, but the possibility is there. Even if prefetching currently works all the time on all browsers supporting SXG, it may change in the future.

(Sub)resources expiring in Google SXG cache

Each SXG has an expiration date enforced by the validity of its cryptographic signature. I found that Google sometimes performs refresh requests, but I'm sure there are scenarios in which the user's browser fetches an expired SXG.

I could not reproduce those errors reliably, but I observe them regularly in my monitoring system. They break the SXG experience entirely if the main document has expired, or partially if one or more subresources have expired.

I was able to simulate the expired subresource error in the browser. In the Chrome Developer Tools’ Network tab, the failed request should have the following status:

```
(failed) net::ERR_INVALID_SIGNED_EXCHANGE
```

If you open the Preview tab in the request details, you should see the following error:

```
Invalid timestamp. creation_time: 1739777019, expires_time: 1740381819, verification_time: 1740383698

Failed to verify the signed exchange header.
```

Additionally, the Date and Expires fields in the Signature section should be marked in red.
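If you collect these messages, the timestamps tell you how long the SXG was valid and how late the browser tried to use it. A minimal sketch; `parseTimestampError` is a hypothetical helper that assumes the message format shown above (Unix timestamps in seconds).

```javascript
// Parse Chrome's "Invalid timestamp" message for a signed exchange
function parseTimestampError(message) {
  const field = (name) => {
    const m = new RegExp(`${name}: (\\d+)`).exec(message);
    return m ? Number(m[1]) : null;
  };
  const created = field('creation_time');
  const expires = field('expires_time');
  const verified = field('verification_time');
  if (created === null || expires === null || verified === null) return null;
  return {
    validitySeconds: expires - created,
    expired: verified > expires,
    notYetValid: verified < created,
    secondsPastExpiry: Math.max(0, verified - expires),
  };
}
```

For the message above, the signature was valid for 604800 seconds (7 days, the maximum the SXG format allows), and verification happened 1879 seconds (about 31 minutes) after it expired.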

Subresources being evicted from Google SXG cache (unconfirmed)

I suspect Google SXG cache entries can be removed before they expire for several reasons, the most important being disk space conservation. When this happens, it may cause prefetching failures that manifest as CORS errors.

However, I was unable to reproduce it. I believe the cache should include an eviction mechanism, but I can also imagine other ways to conserve disk space.

Temporarily missing SXG certificates

I've written about these types of errors before, but for the sake of completeness, I'm covering them here as well.

I witnessed a few cases where a certificate used in the SXG signature was missing from the Google SXG cache. In those scenarios, the browser can’t validate the affected signature leading to the following subresource status in the Network tab of Chrome Developer Tools:

```
(failed) net::ERR_INVALID_SIGNED_EXCHANGE
```

I'm not sure why these errors occur. I suspect it's an issue in Cloudflare ASX or the Google SXG cache, so only those companies can fix it. On the bright side, these errors should be rare and tend to disappear quickly.

Summary

This post is the final one in the category of explaining SXG errors. Here's what you've learned about them:

Non-temporary CORS errors while prefetching SXG pages indicate issues with Google’s SXG cache handling subresources.

Subresources must be immutable, including their HTTP headers. Common issues arise from the absence of the Vary header, Cloudflare's HTTP/2 prioritization, and ETag headers tied to modification times.

Size limitations: Each element of your page, including the HTML document and all subresources intended for prefetching, must be smaller than 1044 KB.

Avoid excessive prefetching: Using SXG to prefetch too much data can increase error rates.

Bot protection: If you want to protect your site from bots, always accept Googlebot requests. This requires configuring Cloudflare's WAF appropriately.

External factors: Some errors may arise from issues beyond your control.

In the next part, I will guide you on how to properly measure the impact of SXG on your website.

I hope you found this post helpful. Thank you for reading!